The AI Cheating Wave

Have students' opportunities to cheat on assignments become too wide open?

Along with the unprecedented power of AI language models comes a growing concern: the ease with which students can exploit these tools for academic dishonesty.

Concerns about AI have developed rapidly in recent months, due to the popularity of the ChatGPT bot, which has passed examinations and made it easier for students to cheat.

For many students it has now become an unprecedented shortcut to generate essays, complete assignments, and even provide seemingly original answers on exams, blurring the boundaries of academic integrity.

A letter to The Times, signed by more than 60 senior education figures, said: “Schools are bewildered by the very fast rate of change in AI and seek secure guidance on the best way forward, but whose advice can we trust? We have no confidence that the large digital companies will be capable of regulating themselves in the interests of students, staff and schools and in the past the government has not shown itself capable or willing to do so.”

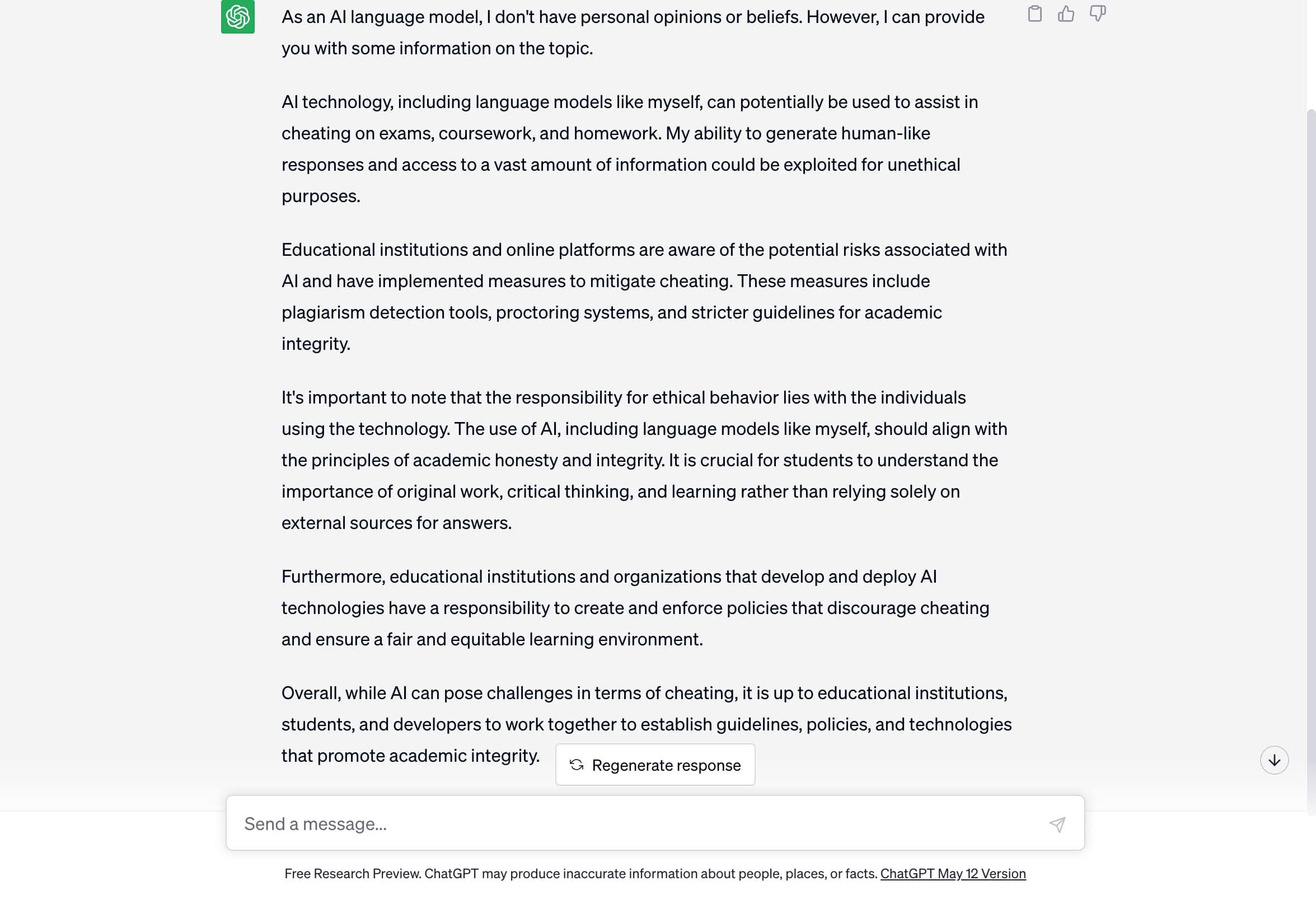

Do you think you will be an issue in making it easier and also unidentifiable for students to cheat in exams, coursework and homework?

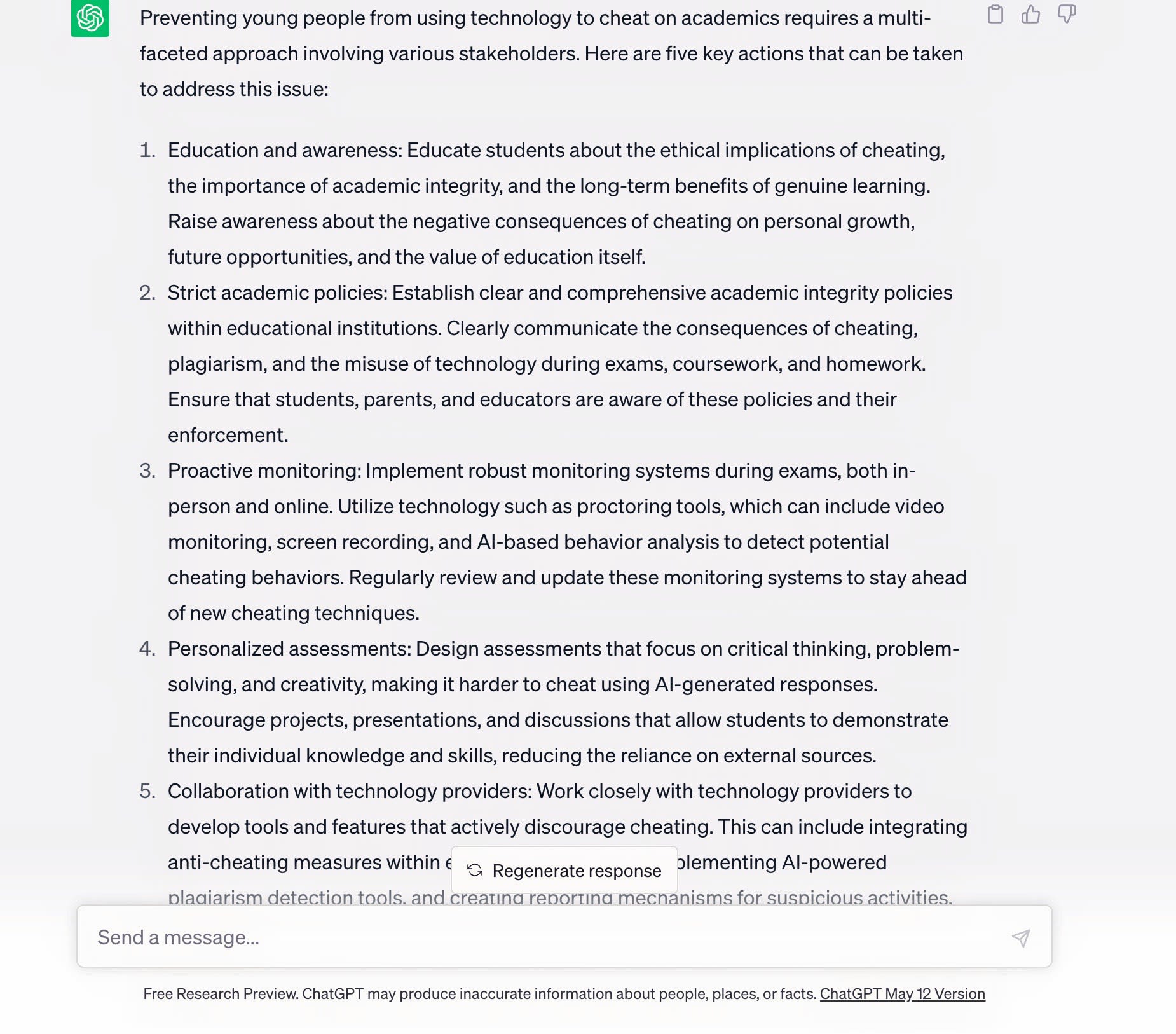

What should be done to stop young people using this technology to cheat in their studies?

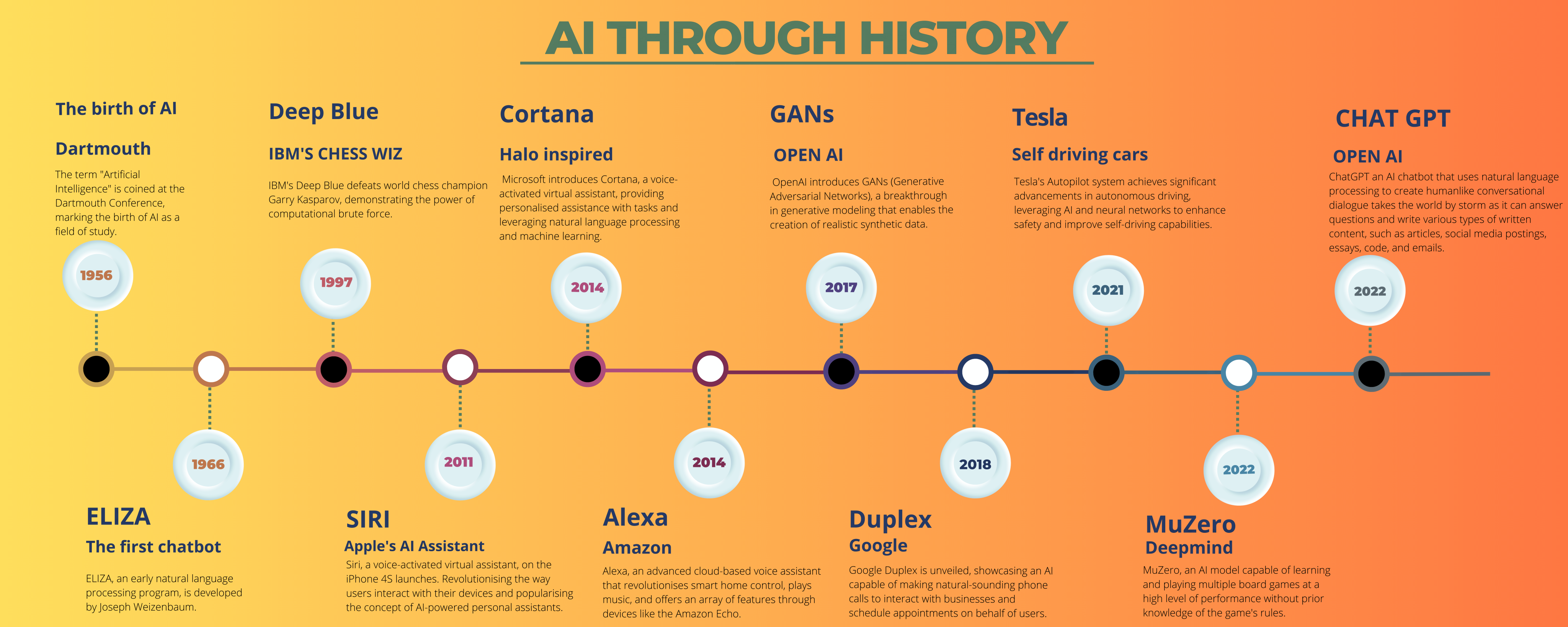

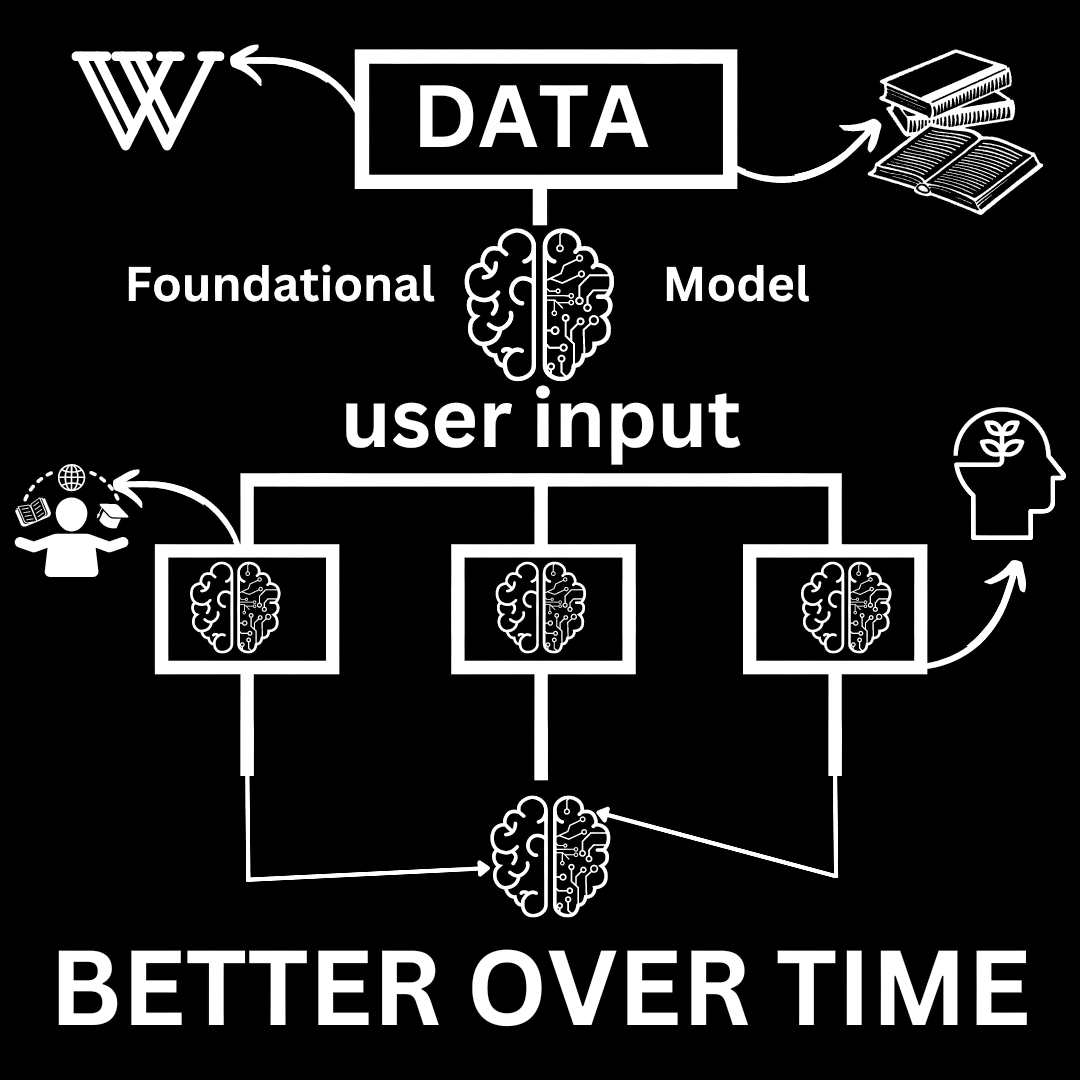

AI language models have emerged as transformative tools in an era characterised by technological development, revolutionising the way people interact with language and information. But to understand the problems, let's go back in time to see how they originated.

These models' amazing capacity to produce content that is coherent, contextually relevant, and frequently indistinguishable from human writing is enabled by cutting-edge developments in natural language processing and machine learning.

The journey traces back to the early days of natural language processing and machine learning.

John McCarthy coined the term "Artificial Intelligence" in a 1955 proposal for what became the seminal Dartmouth Conference of 1956, which he helped organise and which brought together leading minds to discuss and explore the potential of machine intelligence.

Researchers and engineers worked tirelessly to develop algorithms and models capable of understanding and generating human-like text.

AI language models have since been widely adopted, finding applications in domains ranging from content generation and customer service chatbots to language translation and even self-driving cars.

Virtual assistants such as Siri and Alexa have become a huge part of everyday life for many users around the world.

Recently, the model ChatGPT has made headlines across the globe with its ability to produce humanlike conversational dialogue.

But just how do they work?

Technology expert Omri Allouche, who has a thorough understanding of AI language models like MyAi and ChatGPT, breaks down how the models use human-like behaviour to respond to users.

This image was generated using AI - it shows a frustrated teacher marking homework that students submitted using AI.

This image was generated with AI - I asked it to create an image of Jonathan marking students' homework and being confused by it because it came from a student who used AI to cheat.

But with these developments comes a problem that has caused uproar in academic institutions.

Cheating. AI is being used on exams, coursework and even homework, all the way from primary schools right through to universities.

Manchester Metropolitan University's digital skills coach, Caithy Walker, spoke to me about just how hard it's becoming to spot cheating in her classrooms due to ChatGPT.

"It's impossible to tell whether a student has used it or not. The essays that get handed in are a lot better all of a sudden and grades are jumping up incredibly fast.

We know it's not a student's work as the language they use is so different to before but as a teacher, we can't prove it and that makes it much worse.

It worries me as it's unfair on students who work so hard to then have others use AI and a quick search to get the same grade."

Jonathan Wise, an English teacher at William Hulme's Grammar School, thinks that AI can be useful but needs restrictions.

"One of my students submitted homework to me and it was for his creative writing GCSE mock. It was written in American English and I knew right away the student had used AI."

"When you see a student grow from their first day at high school to 4 years down the line, you know how they write.

It has been useful to guide students on ways to revise, which I promote to them. It can make flashcard ideas and other notes. For students this is really helpful to have the guidance but when it's used to cheat it does more harm than good.

I always say to students, you can't cheat or use ChatGPT in the exam hall. So you're only harming yourself.

The creators of the model should ban it from giving responses to students using it to cheat. They could just show students a way to the answer instead.

It's a tool to use in a good way and there's no stopping it but we have to work with it to create positive outcomes not cheating students."

Why are students turning to these models and what do they think of cheating through using it?

Holly Woodwin speaks about her thoughts on AI being used in academics.

Elias Osligt talks about how he uses ChatGPT for his coursework at university and how he thinks it's good for the most part but does have some limitations.

Whilst AI tech continues to grow, many worry about the safety measures that are in place, including some of its creators. Tech billionaire Elon Musk recently co-signed a letter asking for further AI developments to be put on hold until effective measures are in place.

Google chief Sundar Pichai also warned that the wrong deployment of rapidly advancing artificial intelligence technology can “cause a lot of harm” on a “societal level”. He also says he doesn't 'fully understand' exactly how the company's new AI program, Bard, works.

The AI apocalypse... the end is near!